Building trust through transparency: How we’re shaping Real Talent™

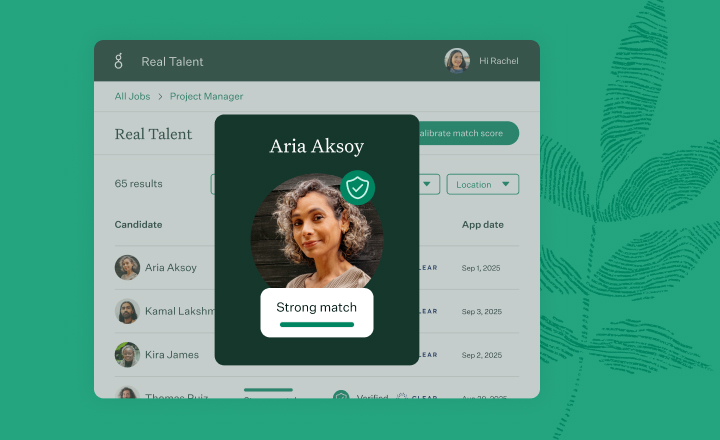

When we first introduced Greenhouse Real Talent™, we shared our vision for helping recruiters and hiring teams cut through the noise at the top of the funnel to find the right people faster, reduce risk and build trust in every hire.

Since that announcement, one question has come up again and again: How can we trust AI to make hiring fairer, not riskier?

It’s a fair question. AI in hiring has sparked both optimism and skepticism, and we take that responsibility seriously. Trust isn’t something we can declare; it’s something we must earn through transparency.

That’s why this update focuses on what we’re doing to make Real Talent a model for responsible innovation through bias audits, fairness testing, candidate identity verification and privacy protection.

Why does transparency matter so much right now?

Recruiting has changed dramatically in the last few years. Application volumes are at record highs, but so are misrepresentation and fraud. At the same time, AI-generated resumes, automated job applications and even deepfakes are making it harder to tell what’s real.

That has created an urgent need for technology that recruiters can trust: tools that make their jobs easier without sacrificing fairness, privacy or control.

At Greenhouse, we believe transparency is the foundation of trust. Our goal with Real Talent is not only to help recruiters make faster, smarter decisions, but to show exactly how those decisions are informed. So, we’re designing Real Talent around three principles:

- Transparency – Everyone involved should understand how and why results appear.

- Accountability – We test, audit and improve fairness continuously throughout the product’s life, not just once.

- Human control – AI assists recruiters but never replaces their judgment.

How are we making AI in Real Talent fair and trustworthy?

The truth is that fairness isn’t something we assume; it’s something we design for, measure, monitor and prove.

That’s why every AI-driven capability in Real Talent, particularly Talent Matching (which helps recruiters prioritize candidates based on job-related data), is subject to monthly third-party bias audits conducted by Warden AI, an independent expert in responsible AI evaluation.

These audits aren’t one-time events. They are ongoing and built into how we develop and release every update to Real Talent.

What exactly do the bias audits test for?

Each audit looks at two key dimensions of fairness:

- Disparate Impact Analysis – Measures whether outcomes differ across demographic groups. This helps ensure that no one group consistently receives more or fewer “fit” signals.

- Demographic Variable Testing – Tests how factors like names, gendered language or hobbies might influence the algorithm’s behavior, identifying hidden or unintended bias.
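To make the first dimension concrete, here is a minimal sketch of one common disparate impact metric: the impact ratio, which compares each group’s selection rate to the highest group’s rate. The 0.8 threshold follows the widely used “four-fifths” rule of thumb. The function name, group labels and sample counts are illustrative assumptions, not Warden AI’s methodology or real audit data.

```python
from collections import Counter

def impact_ratios(records):
    """Return each group's selection rate divided by the highest group's rate.

    records: iterable of (group, selected) pairs, where selected is True
    when the candidate received a positive "fit" signal.
    """
    totals, positives = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    rates = {g: positives[g] / totals[g] for g in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no positive outcomes; impact ratio is undefined")
    return {g: rate / best for g, rate in rates.items()}

# Made-up sample: 40 of 100 candidates in group A and 30 of 100 in group B
# received a positive signal.
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratios = impact_ratios(records)

# Flag any group whose ratio falls below the four-fifths threshold.
flagged = [g for g, r in ratios.items() if r < 0.8]
```

In this made-up sample, group B’s ratio is roughly 0.75, so it would be flagged for a closer look.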

Warden evaluates our algorithms across 10 demographic categories, aligning with regulations like NYC Local Law 144, Colorado SB205 and California FEHA. They use a combination of proprietary data, fairness metrics and real-world validation to uncover even subtle disparities.
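Demographic variable testing can be pictured as a counterfactual check: change only a field that should be irrelevant, such as the name, and confirm the result does not move. The sketch below assumes a hypothetical `toy_match_score` function standing in for a matching model. It is an illustration of the idea, not the actual Talent Matching algorithm or Warden AI’s test suite.

```python
def toy_match_score(resume: dict) -> float:
    """Hypothetical stand-in for a matching model: share of required skills present."""
    required = {"python", "sql", "etl"}
    skills = {s.lower() for s in resume["skills"]}
    return len(required & skills) / len(required)

def counterfactual_gaps(score_fn, resume, field, variants):
    """Swap one field for each variant and report the change from the baseline."""
    baseline = score_fn(resume)
    return {v: score_fn({**resume, field: v}) - baseline for v in variants}

resume = {"name": "Alex Smith", "skills": ["Python", "SQL"], "hobbies": "chess"}
gaps = counterfactual_gaps(
    toy_match_score, resume, "name", ["Maria Garcia", "Wei Chen", "Aisha Khan"]
)

# Any non-zero gap means the swapped field influenced the result.
influenced = {v: g for v, g in gaps.items() if abs(g) > 1e-9}
```

Because the toy scorer only looks at skills, `influenced` comes back empty. A model that reacted to the name would show up here with a non-zero gap.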

If an issue is detected, we retrain and retest the models before they’re released. No new version of Talent Matching can go live until it passes fairness and accuracy criteria. This process ensures that fairness isn’t an afterthought. It is a precondition for release.
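As a rough illustration of “fairness as a precondition for release,” the gate can be thought of as a check that blocks deployment unless every criterion passes. The `AuditResult` fields and both thresholds below are assumptions made for the example. The actual release criteria are not specified in this post.

```python
from dataclasses import dataclass

@dataclass
class AuditResult:
    """Hypothetical summary of one audit run for a candidate model version."""
    min_impact_ratio: float  # lowest impact ratio across all audited groups
    accuracy: float          # accuracy on a held-out evaluation set

def can_release(result: AuditResult,
                min_ratio: float = 0.8,
                min_accuracy: float = 0.9) -> bool:
    """A version ships only if it clears both the fairness and accuracy bars."""
    return (result.min_impact_ratio >= min_ratio
            and result.accuracy >= min_accuracy)

# An accurate model that fails the fairness bar is still blocked.
blocked = can_release(AuditResult(min_impact_ratio=0.72, accuracy=0.95))
approved = can_release(AuditResult(min_impact_ratio=0.91, accuracy=0.93))
```

The point of the `and` is that a strong accuracy number cannot compensate for a failed fairness check: the first example is blocked and goes back for retraining and retesting.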

Why publish the bias audit results publicly?

A lot of companies talk about responsible AI, and some publish one-time or annual audits. Fewer are willing to open their systems to continual scrutiny.

We believe accountability only works if it’s visible. That’s why we’re publishing our bias audit results publicly, in partnership with Warden AI.

Our statement is live on the Greenhouse website, and Warden AI has launched a dedicated dashboard that shows ongoing results. Customers, partners and even candidates will be able to view how Talent Matching performs across demographic groups and how those results improve over time.

This goes far beyond legal compliance, and to us, that’s the point. True transparency isn’t about meeting the minimum bar; it’s about raising it.

We want to empower customers to see, understand and trust how Real Talent operates. If we ever find areas for improvement, we’ll address them and make those findings public. That’s how we build confidence, not through claims, but through proof.

Is Real Talent making hiring decisions automatically?

No. And this distinction is critical.

Talent Matching does not make hiring decisions. It does not score, reject or advance candidates automatically. Instead, it helps recruiters manage large applicant pools more efficiently by surfacing candidates who align with job-specific criteria. Recruiters set the initial criteria and weighting, can see which criteria contributed to each match label and can adjust weightings at any time.
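One way to picture recruiter-set criteria with visible contributions is the sketch below. How Talent Matching actually derives its match labels is not described here, so the function name, the weighting arithmetic, the label names and the cutoffs are all hypothetical. The example only shows how a label can be paired with a per-criterion breakdown that a recruiter can inspect and re-weight.

```python
def explain_match(criteria_weights: dict, candidate_meets: dict):
    """Return a label plus each criterion's contribution to it.

    criteria_weights: recruiter-chosen weights per criterion (hypothetical).
    candidate_meets: whether the candidate meets each criterion.
    """
    total = sum(criteria_weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    contributions = {
        name: (weight / total if candidate_meets.get(name, False) else 0.0)
        for name, weight in criteria_weights.items()
    }
    coverage = sum(contributions.values())
    if coverage >= 0.75:
        label = "strong match"
    elif coverage >= 0.4:
        label = "partial match"
    else:
        label = "limited match"
    return label, contributions

meets = {"5+ years Python": True, "SQL": True, "People management": False}

# Initial weighting chosen by the recruiter.
label_before, why_before = explain_match(
    {"5+ years Python": 3, "SQL": 2, "People management": 1}, meets
)

# The recruiter later decides people management matters much more.
label_after, why_after = explain_match(
    {"5+ years Python": 3, "SQL": 2, "People management": 5}, meets
)
```

The label changes from “strong match” to “partial match” once the recruiter re-weights, and the breakdown shows which criterion drove the change. Nothing in the sketch rejects or advances anyone; the decision stays with the recruiter.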

The goal isn’t to automate judgment; it’s to enhance visibility and reduce manual sorting, so recruiters can spend more time with real people and less time buried in applications that do not meet the basic criteria. In short, AI in Real Talent serves recruiters, not the other way around.

How are candidates protected in this process?

Fair technology has to work for everyone, not just employers. We know that candidate trust is just as important as customer trust. That’s why every component of Real Talent, including CLEAR® identity verification, was designed with privacy, fairness and transparency in mind.

What is CLEAR and how does it build trust at the top of the funnel?

As misrepresentation and fraud become more sophisticated and prevalent, verifying that an applicant is who they say they are has become essential. CLEAR helps solve this challenge directly within Greenhouse by verifying candidate identity securely, quickly and transparently, using proven biometric technology, not AI.

Here’s how it works:

- Seamless experience: Candidates verify their identity inside MyGreenhouse using a simple selfie and ID check. Over 33 million CLEAR users can verify instantly, while new users complete a one-time setup for future reuse.

- Privacy-first approach: CLEAR shares only minimal data, such as name, email, phone and pass/fail results, so recruiters see a simple “Verified” status, not personal details or documents.

- Data security: CLEAR protects your business and your users by storing the most sensitive data securely and only with user consent, and it maintains robust standards for data protection and privacy. CLEAR complies with applicable laws, including GDPR, CCPA, BIPA and PIPEDA.

- Candidate control: Verification is always optional, and candidates can delete their information from CLEAR at any time.

- Fairness and inclusion: CLEAR’s technology is independently evaluated by the Department of Homeland Security and NIST for consistent performance across demographics.
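The privacy-first point above is a form of data minimization: only an allowlisted set of fields ever leaves the verification step. The sketch below illustrates that pattern with made-up field names and a made-up record. It does not represent CLEAR’s actual API or data model.

```python
# Hypothetical allowlist mirroring the fields named above:
# name, email, phone and a pass/fail result.
ALLOWED_FIELDS = ("name", "email", "phone", "verified")

def minimal_share(verification_record: dict) -> dict:
    """Keep only allowlisted fields; everything else is dropped by default."""
    return {k: verification_record[k]
            for k in ALLOWED_FIELDS if k in verification_record}

def recruiter_status(shared: dict) -> str:
    """Recruiters see a simple status, not the underlying details."""
    return "Verified" if shared.get("verified") else "Not verified"

# Made-up record for illustration only.
record = {
    "name": "Alex Smith",
    "email": "alex@example.com",
    "phone": "555-0100",
    "verified": True,
    "id_document_image": b"...",   # never forwarded
    "selfie_image": b"...",        # never forwarded
}
shared = minimal_share(record)
status = recruiter_status(shared)
```

Using an allowlist rather than a blocklist means any new sensitive field is excluded automatically unless someone deliberately adds it.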

For recruiters, CLEAR builds confidence at the top of the funnel by confirming identity early without introducing bias.

For candidates, it provides a simple, respectful way to demonstrate authenticity and take control of their own data.

Transparency here means choice, clarity and control. Candidates should know what’s being shared, how it’s used and who can see it, and with CLEAR, they do.

How do these safeguards come together?

Transparency isn’t just a feature; it’s a framework that underpins everything about Real Talent.

- Recruiters get explainable AI they can see, understand and adjust.

- Candidates get control and privacy over their own identity data.

- Organizations get confidence that their tools are ethical, audited and compliant.

By combining audited AI with verified identity, Real Talent helps restore something that’s been missing in the hiring ecosystem: trust in the hiring process.

What’s next for Real Talent?

This month, we entered the closed beta phase, and customers are helping test Talent Matching and CLEAR verification in real-world hiring environments.

The purpose of this beta is not to move fast for the sake of speed, but to move responsibly: to validate accuracy, usability and fairness before full release. We’re working hand-in-hand with our Real Talent Advisory Council, which includes leaders across talent acquisition and security who are helping shape the product and hold us accountable to these values.

We’ll share more updates publicly, including audit outcomes, beta learnings and customer insights, as we move toward general availability early next year.

Why this matters

The rise of AI and identity verification in hiring has created new possibilities, but also new responsibilities. We’re not just building a faster way to hire; we’re building a more transparent, accountable and human one.

Real Talent reflects what we believe hiring technology should be: explainable, ethical and trustworthy by design. Because in an age of automation, the most powerful advantage any company can have is the one thing AI can’t replicate: trust.

If you’d like to be part of our beta or follow our progress, join the Real Talent waitlist and help us shape the future of fair and transparent hiring.